AI systems are developing rapidly. Much of this progress is attributed to better language models, but other factors are the primary drivers. MCP is one such factor that has received attention recently.

Although language models themselves can be used to solve many tasks, they have some limitations:

- They only represent their training data, not reality. On their own, language models do not have access to real-time data, and any errors in the training data are reflected in the model. Language models are not fact models.

- Language models are trained largely on publicly available data. They have no knowledge of, or access to, your company’s data.

- Language models cannot interact with the outside world.

You may not have noticed these limitations, because you have seen that ChatGPT, for example, can search the web. But ChatGPT is not a language model; it is a chat service. It is not the language model that searches the internet; it is the chat application. Several other services have also entered the market that give the impression that language models have acquired completely new and groundbreaking abilities. The common denominator is a new protocol called Model Context Protocol, MCP. To better understand MCP, let’s look at how AI tools have developed from November 2023 until today.

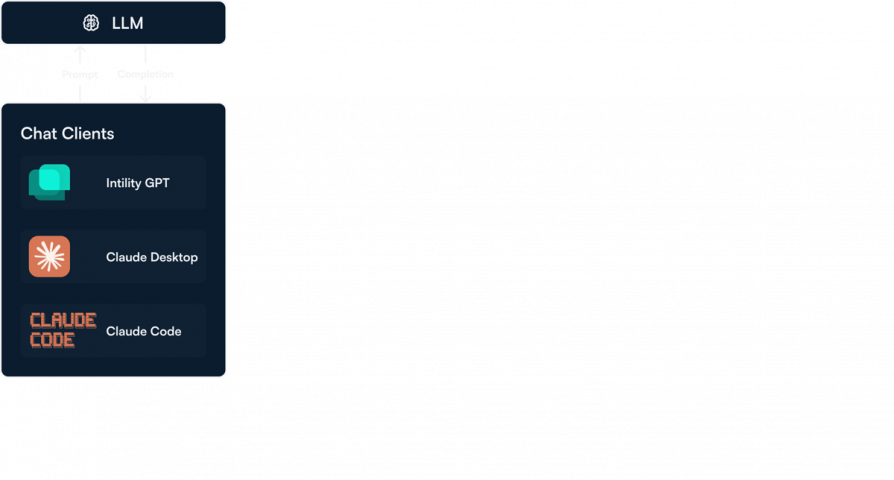

Simple Interaction with Language Model

In the beginning, almost all AI chat services followed this relatively simple architecture. A chat service such as Intility GPT consisted of two main components:

- Chat client: Manages user input, message history, etc.

- Large Language Model (LLM): Receives input (prompt) and generates an output (completion).

Such services worked excellently for tasks such as translation, summarization, analysis of large amounts of text, troubleshooting code, etc. Many tasks today, and in the future, will be solved with such a service.
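
The two components can be sketched in a few lines of Python. This is a minimal illustration, not how Intility GPT or any real service is implemented: `echo_model` is a hypothetical stand-in for an LLM call, and the `ChatClient` class and the message format are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable

# Assumed message shape for this sketch: {"role": ..., "content": ...}
Message = dict[str, str]


def echo_model(messages: list[Message]) -> str:
    """Hypothetical stand-in for an LLM: takes a prompt, returns a completion."""
    last_user_message = messages[-1]["content"]
    return f"(completion for: {last_user_message})"


@dataclass
class ChatClient:
    """Manages user input and message history; delegates generation to the model."""

    model: Callable[[list[Message]], str]
    history: list[Message] = field(default_factory=list)

    def send(self, user_input: str) -> str:
        # Record the user's message, then pass the full history to the model.
        self.history.append({"role": "user", "content": user_input})
        completion = self.model(self.history)
        # Record the completion so the next turn has the whole conversation.
        self.history.append({"role": "assistant", "content": completion})
        return completion


client = ChatClient(model=echo_model)
reply = client.send("Translate 'hei' to English")
```

Note that the model only ever sees what the client passes in. Anything outside the message history is invisible to it.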

However, there are several tasks that such a simple service cannot solve. Answering questions that require private, proprietary information, searching the internet for real-time information, and performing actions in an external environment were not possible. Extending the architecture with tools solved this.

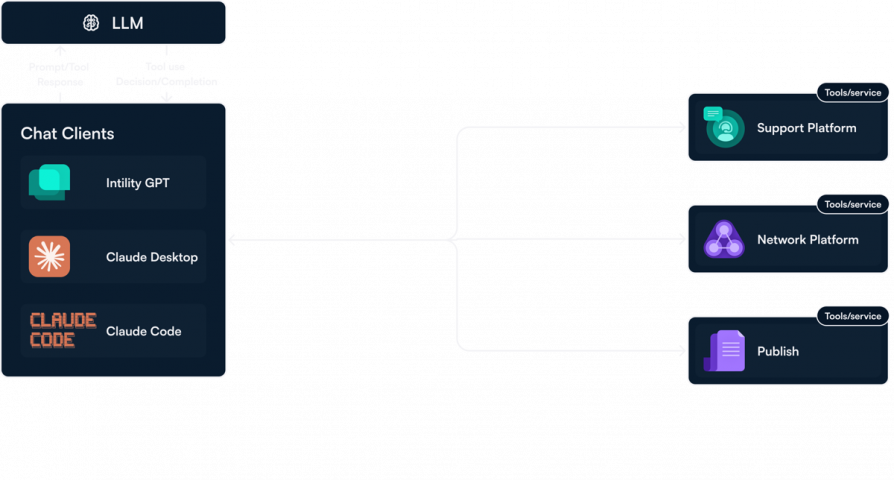

Language Model + Tools

The introduction of tools initially had nothing to do with the language model; it was an extension of the chat clients. Instead of communication only between the chat client and the language model, the flow now looks like this:

- User input: Can you help me with XYZ?

- The language model responds with either:

  - I can’t answer this, but use tool XXX with input YYY and send the result back to me so I can assist you.

  - Of course, I can help you with XYZ.

- Depending on what happened in the previous step, the chat client will either:

  - Call tool XXX with input YYY and send the result back to the language model. The language model will generate a response that is presented to the user.

  - Present the answer to the user.
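
The flow above can be sketched as a small loop in the chat client. This is only an illustration under stated assumptions: `fake_model` is a hypothetical stand-in for a language model, `web_search` is a dummy tool, and the reply format (`"tool_call"` versus `"answer"`) is invented for the example rather than taken from any specific vendor API.

```python
from typing import Callable


def web_search(query: str) -> str:
    """Dummy tool; a real one would call a search API."""
    return f"Top result for '{query}'"


# Tools live inside the chat client: a name mapped to a Python function.
TOOLS: dict[str, Callable[[str], str]] = {"web_search": web_search}


def fake_model(messages: list[dict]) -> dict:
    """Hypothetical model: returns either a tool request or a final answer."""
    last = messages[-1]
    if last["role"] == "tool":
        # A tool result came back, so the model can now answer.
        return {"type": "answer",
                "content": f"Based on the tool result: {last['content']}"}
    if "weather" in last["content"].lower():
        # The model cannot answer itself and asks the client to run a tool.
        return {"type": "tool_call", "tool": "web_search",
                "input": last["content"]}
    return {"type": "answer", "content": "Of course, I can help you with that."}


def chat_turn(user_input: str, max_tool_calls: int = 3) -> str:
    messages = [{"role": "user", "content": user_input}]
    for _ in range(max_tool_calls + 1):
        reply = fake_model(messages)
        if reply["type"] == "answer":
            return reply["content"]  # present the answer to the user
        # The chat client, not the model, executes the tool.
        result = TOOLS[reply["tool"]](reply["input"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("model kept requesting tools")


direct = chat_turn("Summarize this text")
with_tool = chat_turn("What is the weather in Oslo?")
```

The model never runs `web_search` itself. It only asks for it, and the client decides whether and how to execute the call.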

With this extension, the chat service has more uses and can solve even more problems. Examples of commonly used tools are:

- Web search

- Document search and processing

- Code execution

- Image generation

In theory, there are no limitations on what tools can be created and made available for a chat client. You can attach as many documents, specialized applications, and private data sources as you like, as long as a developer can write code for it.

Although this sounds very promising, there are some important limitations:

- The tools you create are closely tied to the client. There is no way to share these tools with other clients, leading to code duplication.

- There is no simple way to manage access and permissions at scale. The responsibility for implementing access control is left to each client developer, which is a security risk.

These limitations have made it impossible to integrate professional applications seamlessly into services like Intility GPT. This is where MCP comes in to address these challenges.

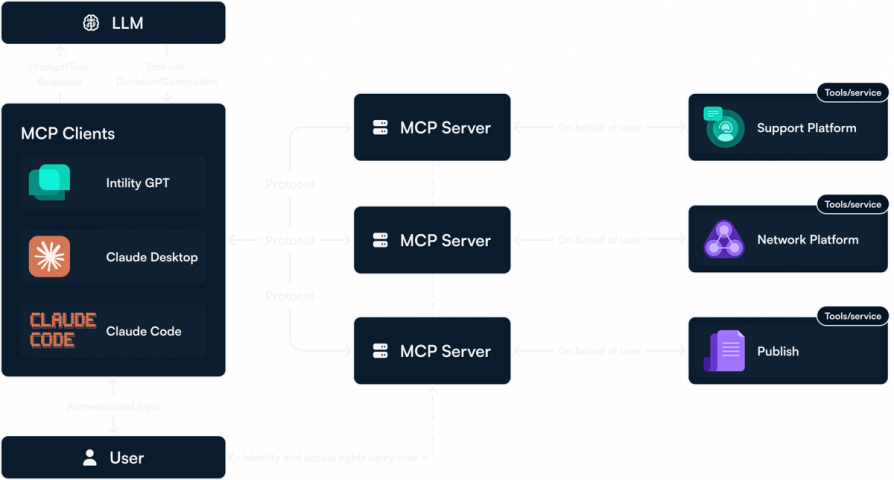

Language Model + MCP

The architecture with MCP is quite similar, but the diagram has a new component: the MCP Server. The MCP Server functions as a standardized bridge between the client and external systems. Other important changes in the diagram above are:

- Chat Client becomes MCP Client: The client must support the protocol, and every client that does is called an MCP Client. An MCP Client can communicate with any MCP Server.

- User: The diagram now includes the user and shows how the user’s identity is passed between the MCP Server and the external service. It is very important that the MCP Server uses the user’s context so that the user’s permissions are preserved.

The power of MCP is that any MCP Client can use any MCP Server.
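
To make the idea of a standardized bridge more concrete, here is a simplified sketch of the kind of messages exchanged. MCP is built on JSON-RPC 2.0, and a client can discover and invoke tools with the `tools/list` and `tools/call` methods. Everything else below is an assumption for illustration: the `get_ticket_status` tool is hypothetical, the handler runs in-process instead of over a real transport, and the initialization handshake and most error handling are left out. A real server would normally be built with one of the official MCP SDKs.

```python
import json


def get_ticket_status(ticket_id: str) -> str:
    """Hypothetical tool; a real one would query a ticketing system."""
    return f"Ticket {ticket_id} is open"


# Each tool is described by a name, a description, and a JSON Schema for its input.
TOOLS = {
    "get_ticket_status": {
        "handler": get_ticket_status,
        "description": "Look up the status of a support ticket",
        "inputSchema": {
            "type": "object",
            "properties": {"ticket_id": {"type": "string"}},
            "required": ["ticket_id"],
        },
    }
}


def handle_request(raw: str) -> str:
    """Handle one JSON-RPC request string and return the response string."""
    request = json.loads(raw)
    method = request["method"]
    if method == "tools/list":
        # Discovery: tell the client which tools exist and what input they take.
        result = {"tools": [
            {"name": name, "description": tool["description"],
             "inputSchema": tool["inputSchema"]}
            for name, tool in TOOLS.items()
        ]}
    elif method == "tools/call":
        # Invocation: run the named tool with the supplied arguments.
        params = request["params"]
        text = TOOLS[params["name"]]["handler"](**params["arguments"])
        result = {"content": [{"type": "text", "text": text}]}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


listing = json.loads(handle_request(json.dumps(
    {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
call = json.loads(handle_request(json.dumps(
    {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
     "params": {"name": "get_ticket_status", "arguments": {"ticket_id": "42"}}})))
```

Because the client only depends on these message shapes, it does not need to know anything about the ticketing system behind the server.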

Who creates the MCP Server?

In theory, anyone can create an MCP Server. The only requirement is programmatic access to the system or data source you want to integrate with. Typically, you see three variants:

- The application provider creates an MCP Server for its own application. Existing examples include GitHub, PayPal, and Stripe.

- Community/MCP as a Service: A large selection of open-source MCP Servers is available that can be run on your own infrastructure at no cost. Some providers also offer MCP Servers as a paid service.

- Create it yourself. As long as the system you are targeting has an API, you can create MCP Servers yourself. This allows you to tailor it to your own needs.
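
If you build a server yourself, the tool you expose is typically a thin wrapper around an existing API, and it should act on behalf of the calling user. The sketch below illustrates that idea only. All names are hypothetical (`UserContext`, `backend_get_document`, the `ACL` table), and the in-memory dictionaries stand in for a real backend that would enforce permissions based on the user's token.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserContext:
    """Hypothetical identity the MCP Server receives from the client."""
    user_id: str


# Stand-in for an existing system with its own access control.
ACL = {"salary-report": {"alice"}, "lunch-menu": {"alice", "bob"}}
CONTENT = {"salary-report": "Confidential figures", "lunch-menu": "Soup on Monday"}


def backend_get_document(doc_id: str, acting_user: str) -> str:
    """The backend API decides what the acting user may read."""
    if acting_user not in ACL.get(doc_id, set()):
        raise PermissionError(f"{acting_user} may not read {doc_id}")
    return CONTENT[doc_id]


def read_document_tool(doc_id: str, user: UserContext) -> str:
    """Tool exposed by the server: forwards the user's identity to the backend
    instead of using a shared service account with broad access."""
    try:
        return backend_get_document(doc_id, acting_user=user.user_id)
    except PermissionError as exc:
        return f"Access denied: {exc}"


bob = UserContext(user_id="bob")
allowed = read_document_tool("lunch-menu", bob)
denied = read_document_tool("salary-report", bob)
```

The design choice here is that the server holds no permissions of its own. The existing system stays the single place where access is decided.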

Build for the Future and Avoid “Vendor Lock-In”

MCP is an open standard that separates the integration itself (the MCP server) from both the client and the language model. This means that the connections to your systems are not embedded in one specific chat service or a vendor-specific API. In short, you build once and reuse across tools and environments.

An MCP Server can be used from various MCP Clients and with different models. MCP Servers can also run where the data resides (on-premises, sovereign cloud, public cloud, or edge) and be securely exposed via the protocol.